Batching Grass

July 15, 2025

For the last couple of years, on evenings and weekends, I have been building a game in Unity. It’s a great way to get some coding practice and I have learned a huge amount in that time.

The most complex, performance intensive and mentally challenging aspect of the whole exercise has been… grass!

This blog tells the story of how, over the space of a year or two, I improved grass batching and rendering to get the best looking grass for the lowest CPU time.

Grass

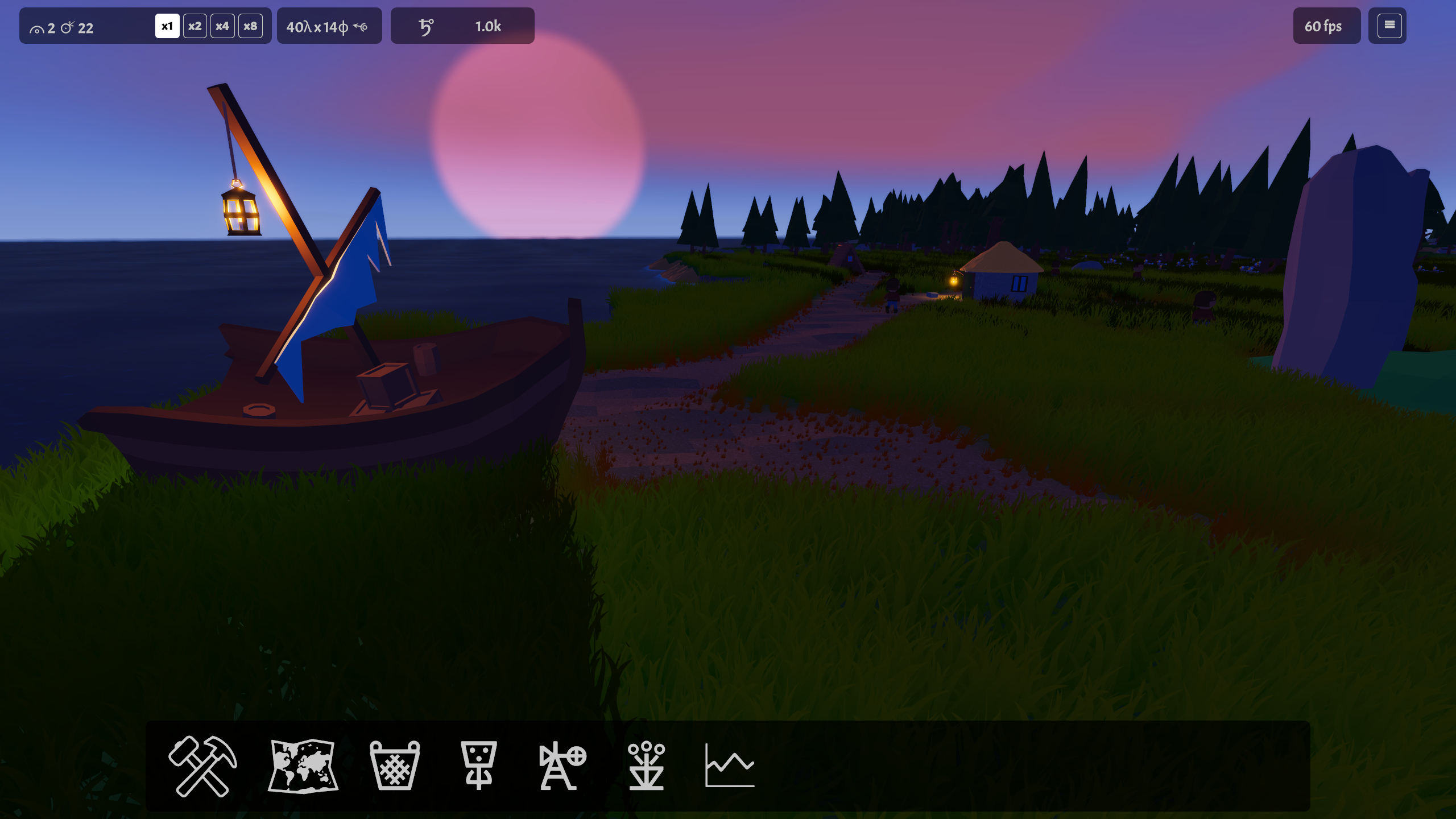

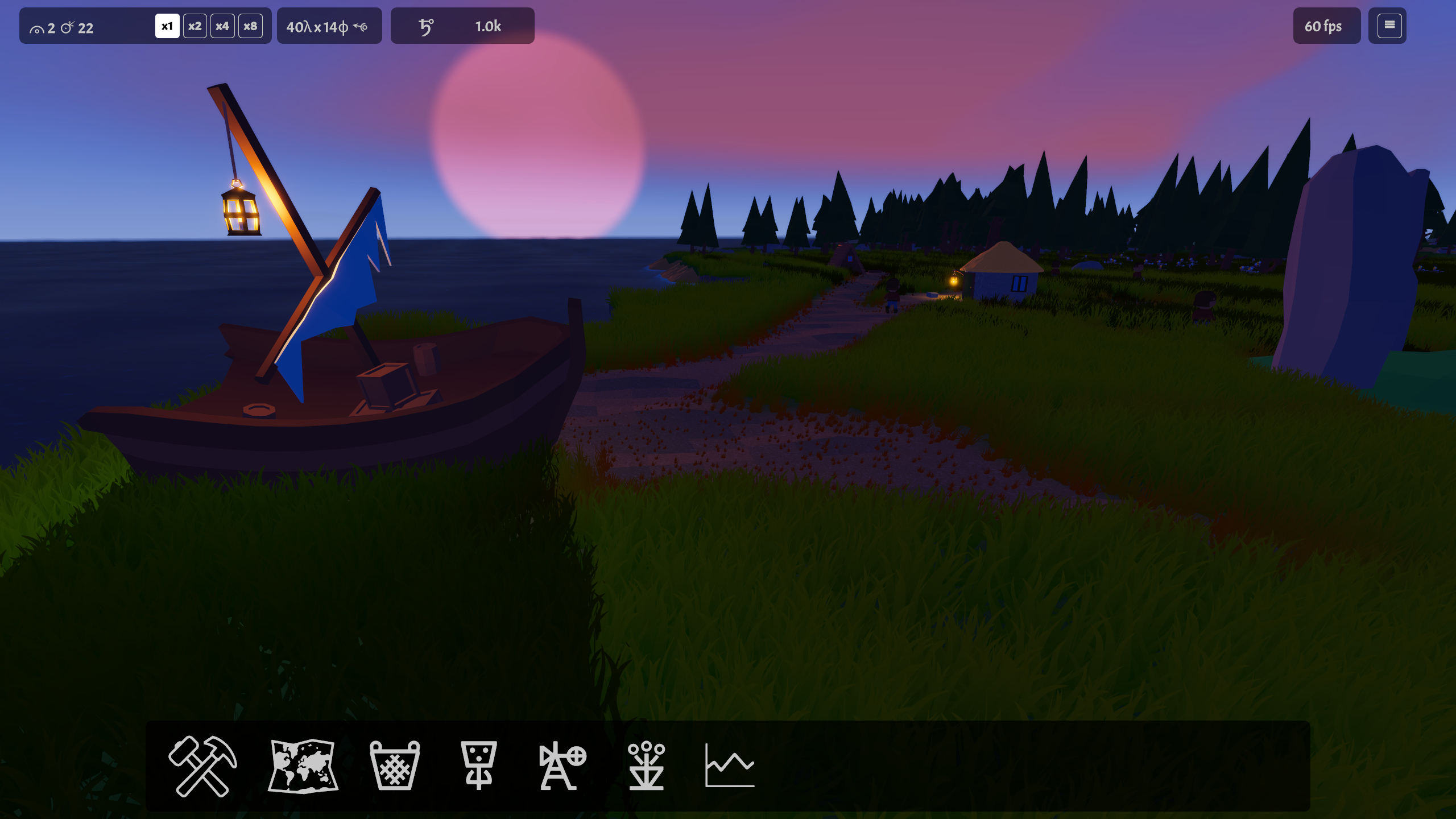

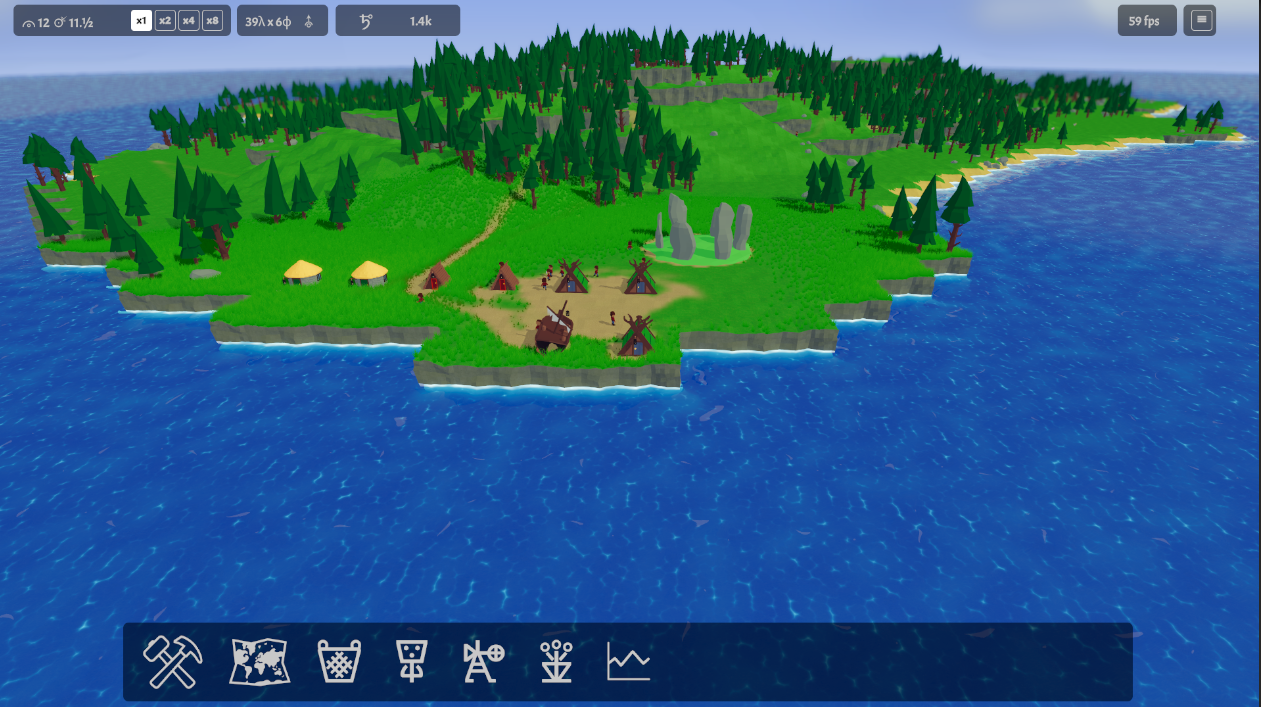

Grass is really important in the game, which revolves around some ill fated sailors who have been shipwrecked on an island. As the colonists spread out, build buildings and walk around the grass gets worn away. They can also cut the grass, grind the seeds in a windmill and make bread (because what kind of resource management game would it be if it didn’t have a farm -> windmill -> bakery supply chain!?).

As colonists and animals move around, they wear the ground by writing to a 1024x1024 texture mapped to the terrain. Wear goes on the red channel, as a single float value from 0.0 (not worn at all) to 1.0 (completely worn).

On the green channel of the same texture is another float, representing the fertility - i.e. the amount of grass - from 0.0 (no grass) to 1.0 (max grass).

A shader uses these two numbers to render the ground, using the fertility and wear level to interpolate between two colours (green and brown basically). The game is playable that way, but a flat floor is not great for getting the player to buy into the idea of grass as a precious resource!

Enter one million grass instances! Rendering a million of anything is hard work - but luckily we can use Unity’s Graphics.DrawMeshInstanced() function to draw them in batches of 1024. I won’t tell you how this works, because there are some great YouTube videos on the subject:

- Acerola’s amazing series on grass

- OverPhil Dev - the video that sparked the idea

- Switching up from a single blade to a billboard

As you can see from the screenshot, 3D grass makes the island look ten times more lifelike, and the impact of wear on the terrain seems much more real.

Batches of grass

So Graphics.DrawMeshInstanced() let’s you draw many instances of a single mesh to the screen with one draw call. We pass in a mesh, a material and a list of 4x4 matrices encoding the TRS (transform, rotation and scale) of each instance. Unity then renders them all directly on the GPU, which is super fast. Great!

But there’s a catch. We can only pass in 1023 TRS matrices per call. So to render all one million blades of grass, we’d need almost a thousand calls per frame. That’s way more than we can sensibly do. For context, running at 60fps we have 16.6ms to do everything we need to do on the CPU - and I’d like to do more than just look at grass!

So, we need to chunk the grass up into batches and we need to find a way to render only a subset of the batches we have. The rest of this post is about the different algorithms I tried to create the most optimal set of batches.

We’re aiming to optimise various competing things:

- Render as much grass as possible every frame, so the game looks good.

- Execute as quickly as possible every frame, using as little of our precious ~16ms budget as we can

- Maximise the number of grass blades we cram into every batch - don’t waste space in the TRS matrix array

- Render the smallest number of batches we can get away with, because

DrawMeshInstancedis a bottleneck - Though we’re fine doing some up-front setup when the game loads, don’t spend too long on initialisation

Algorithm 1: Simple square batches

So the algorithm which chooses the positions for the grass blades runs when the map is created. It uses a noise function, a bunch of raycasts and, critically, a set maximum number of grass blades per square metre.

The simplest chunking algorithm is to split the map into an equal number of square blocks, with their size based on the maximum number of grass instances per m^2. In the simplest case, if we were aiming for 1023 blades of grass per square meter, every batch would be 1x1m in size. Likewise if it’s 250 blades per m2, batches would be 2x2m in size…

The algorithm is what got me going with mesh instancing, and it worked fine… but it was pretty slow!

- The map is 640x640m in size - so 409600 square meters

- Attempting to place a modest 20 grass blades per m^2 means a theoretical maximum of about 8.2 million blades

- So a batch area of around 51m^2 and a batch size of 7.15x7.15m

- That gives roughly 8000 batches in total

We only render batches within 150m of the camera, and additionally limit ourselves to rendering only those in front of the camera (frustrum culling) - so we’re winning there, with only a subset of batches being sent to the GPU each frame. Typically, the code was taking about 5.3ms every frame. Not great - we’ve burned 30% of our frame budget on grass!

Algorithm 2: Collapse adjacent batches

Why was out first attempt so slow? Well the problem is that the game is set on an island. Grass doesn’t grow in the sea, it doesn’t grow on steep cliffs and, more importantly, it doesn’t grow uniformly either - there are natural areas where the grass is less dense due to lower fertility.

So when I profiled the code, I found that the majority of the batches were less than 10% full, and a good proportion were completely empty. Even filtering out draw calls for the empty batches, there was ample room for improvement.

My first attempt at solving this was to walk through the batches and merge them together. Looking at any pair of neighbour batches, if the total number of grass blades across each was less than 1024, we merge the grass data and expand the bounds, creating one new, larger batch. All this can be done during setup, meaning less batches with more grass in each.

This got me down to about 4ms per frame. Just over 20% better, and down to 23% of the frame budget. It wasn’t great, but I was bored of grass, so I left it this way for a few weeks.

Algorithm 3: Binary splitting

As I carried on coding other bits of the game, every time I ran the profiler, there was the grass instancer. Sat at the top of the list, eating a huge chunk of CPU time before anything else got a look in… Eventually, one day I snapped. I googled better ways to split the area down into sensibly sized batches and got coding.

You see, the issue with my merging algorthm (number 2) is that it’s easy to merge a couple of adjacent grid squares, but what do you do when you need to merge a whole row - more than a row? You either get underpopulated or very large batches, which both slow things down in their own way.

Skipping over a flirtation with Quad Trees which actually slowed things down, I came up with the idea of using a binary split. The code is actually generalised to split any number of times over the X or Z axes - but it turned out two was best.

private List<Batch> CreateBatchesBinarySplit(

Bounds bounds,

List<Vector3> grassBlades,

bool splitHorizontal = true)

{

var result = new List<Batch>();

int grassCount = grassBlades.Count;

if (grassCount <= BATCH_SIZE && grassCount >= minBatchSize)

{

// This batch is small enough to use

result.Add(CreateBatch(bounds, grassBlades));

}

else

{

// Split horizontal or vertical - and alternate as we recurse

var splitBatchBoundsList = splitHorizontal

? SplitBounds(bounds, 2, 1)

: SplitBounds(bounds, 1, 2);

// For each of the new bounds...

foreach (var batchBounds in splitBatchBoundsList)

{

// Find the grass blades in the smaller bounds

List<Vector3> quadGrass = new();

for (int i = 0; i < grassBlades.Count; i++)

{

if (batchBounds.Contains(grassBlades[i]))

{

quadGrass.Add(grassBlades[i]);

}

}

// Recurse and split as needed

var childBatches = CreateBatchesBinarySplit(

batchBounds,

quadGrass,

!splitHorizontal

);

result.AddRange(childBatches);

}

}

return result;

}

The function starts with the complete bounds of the island and the full set of grass blades. If there’s more than 1023 grass blades, it splits the bounds in half down the middle. We then recurse into each of these new, smaller batches and repeat the process until, at some arbitrary depth, we have less than 1023 grass blades within our bounds.

Each time we recurse, we flip from a horizontal to a vertical split - so batches alternate from being tall rectangles to wide rectangles - but stay more or less square-ish. I came up with this bit myself and was frankly quite chuffed.

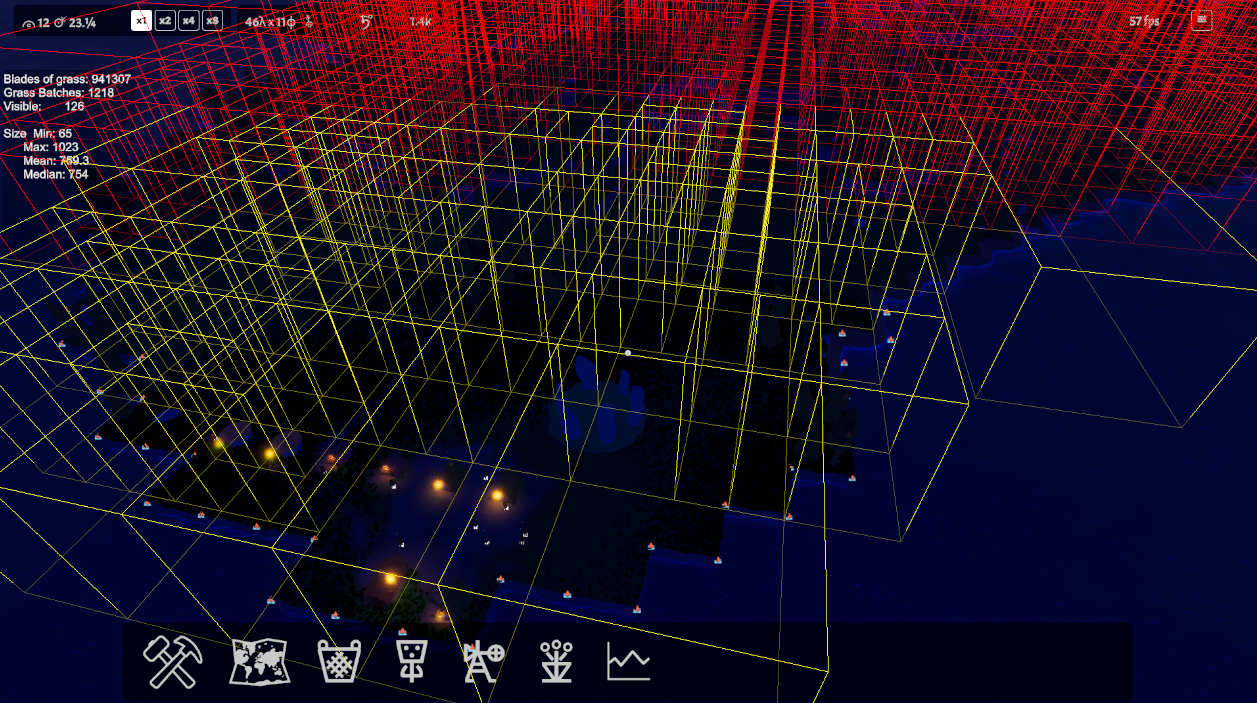

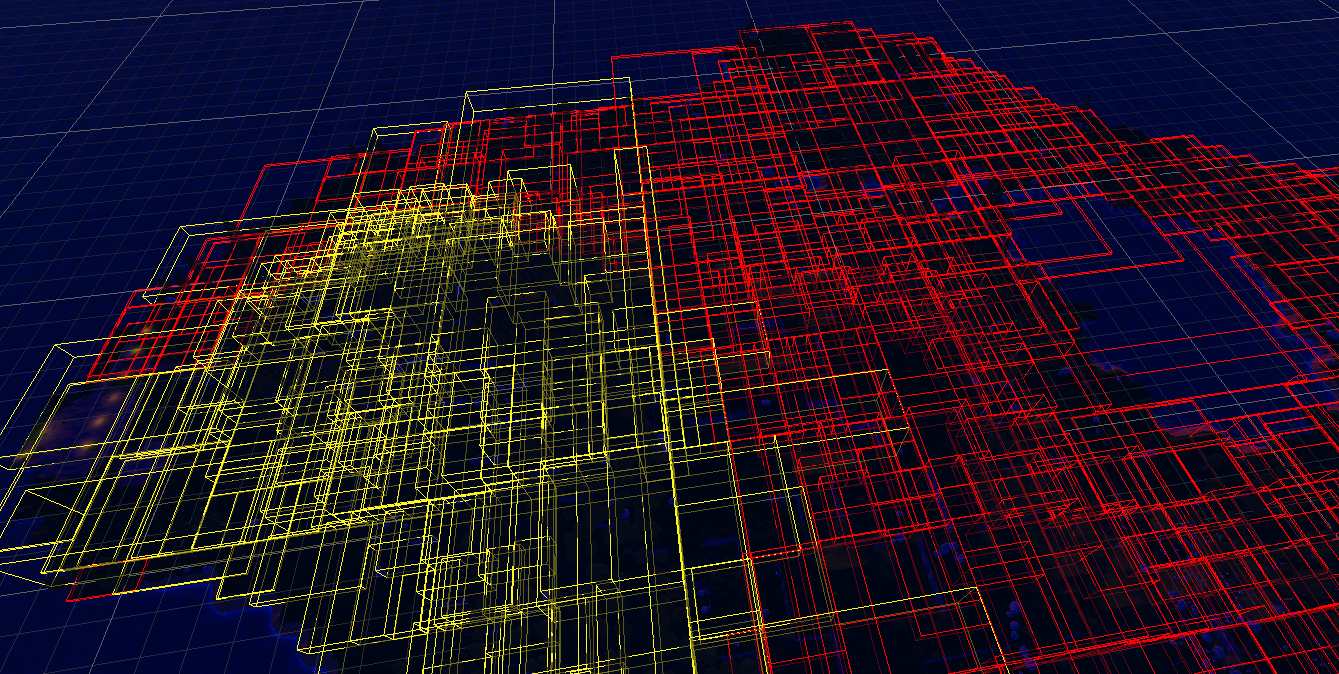

The image below shows the resulting batch bounding boxes. I waited until it got dark so you can see them more clearly!

Yellow batches are being rendered, red batches are hidden. You can see that batches near the sea shore are larger, as there’s less grass there, while batches further into the scene get quite small where the grass is dense.

Looking at the stats on the left of the pic, we have 941k grass instances total, split into 1218 batches. Based on the camera positon shown we’re rendering only 126 of those batches. Exploring the scene a bit more, I never went above 200 visible batches.

This algorithm for batching got us much better packing and a per frame timing of 2.7ms - another ~30% improvement. Once that was working, I did some optimisations to the code itself: swapping lists for arrays, using a unity Culling Group for the camera view frustrum checks, adding a movement deadzone to the camera… eventually getting down to 1.74ms. Not too shabby when compared to the original timing - we’re down to using only 10% of our frame budget!

Bonus algorithm 4: Morton ordering

I’m a software developer and it’s 2025, so faced with an optimisation problem, my first thought is to paste the code into ChatGPT and say “optimise pls”. This took more prompting than maybe I expected - it picked up a few simple things and missed the point about which parts of the algorithm needed improvement… but then it suggested something new - using a Morton Ordering.

Y’see, the real core of our chunking problem is that you can’t sort the grass blades by their X and Z coordinates at the same time. If you could do that, you could read from the list in order and just create batches, knowing that each batch contained grass blades close to each other on both the X and Z axes (it’s X and Z because Y is up in Unity BTW). In reality though, you can only sort by X or Z, which just gives you long thin batches, which are useless for our use-case.

A Morton Ordering gives us a rough approximation of this X and Z ordering by doing some funky bit-bashing.

Basically, the Morton Ordering takes the normalised X and Z coordinates, multiplies them up and converts to an integer. Multiplying by a big number before rounding preserves some of the detail after the decimal place. Once it has two big integers which correlate to the X and Z coordinates, we shuffle the individual bits of each integer together - creating a big number which approximates both X and Z.

The bits end up shuffled like this: x0, z0, x1, z1, x2, z2 ... with X’s most and least significant bits adjacent to Z’s most and least significant bits.

We can then sort by the Morton number and just read batches off the list in 1023 chunks! Pretty cool huh?

Here’s the code:

private List<Batch> CreateBatchesMorton(Bounds mapBounds, List<Vector3> grassBlades)

{

var batches = new List<Batch>();

grassBlades.Sort(

(a, b) => Morton(a, mapBounds).CompareTo(Morton(b, mapBounds))

);

for (int i = 0; i < grassBlades.Count; i += BATCH_SIZE)

{

int n = Mathf.Min(BATCH_SIZE, grassBlades.Count - i);

var chunk = grassBlades.GetRange(i, n);

var bounds = ComputeBounds(chunk);

batches.Add(CreateBatch(bounds, chunk));

}

return batches;

}

public static uint Morton(Vector3 pos, Bounds bounds)

{

// Normalise position within bounds

float nx = Mathf.InverseLerp(bounds.min.x, bounds.max.x, pos.x);

float nz = Mathf.InverseLerp(bounds.min.z, bounds.max.z, pos.z);

// Quantize to 16 bits

uint ix = (uint)(Mathf.Clamp01(nx) * 65535f);

uint iz = (uint)(Mathf.Clamp01(nz) * 65535f);

// Interleave and return

return (SpreadBits(iz) << 1) | SpreadBits(ix);

}

// Spreads the bits of a 16bit number over 32 bits with 0's between

private static uint SpreadBits(uint x)

{

x &= 0x0000FFFF; // only the lower 16bits have anything in 'em

x = (x | (x << 8)) & 0x00FF00FF;

x = (x | (x << 4)) & 0x0F0F0F0F;

x = (x | (x << 2)) & 0x33333333;

x = (x | (x << 1)) & 0x55555555;

return x;

}

And here is a picture of the batches created. As you can see… they are a bit messy. We’ve lost the nice crisp edges and introduced a bunch of overlap.

Looking at the stats though, we can see that even with the batches overlapping, we’re still getting slightly better performance. And I do mean slightly!

| B-Split | Morton | |

|---|---|---|

| Per frame time | 1.74ms | 1.63ms |

| Batches | 1218 | 921 |

| Min batch size | 65 | 147 |

| Median batch size | 754 | 1023 |

| Max batch size | 1023 | 1023 |

So the Morton approach is faster… but only by 0.1ms per frame. Maybe a 5% improvement if we’re being generous.

The issue is how we’re balancing between drawing the smallest number of batches possible each frame, versus making sure each of those batches is full with grass - to maximise draws per DrawMeshInstanced call.

Conclusions

In the end I couldn’t decide which algorithm to use, so I added a dropdown to select between Morton and B-split. The reason is the one row of the table I didn’t show above…

| B-Split | Morton | |

|---|---|---|

| Loading/setup time | 4.2s | 14.2s |

Bit bashing might be quick, but sorting a list of a million elements is slow! An extra 10 seconds on load time is a heavy price to pay for a 0.1ms performance improvement per frame.

So what did we learn:

- Optimisation is fun! I really enjoyed this and I have no doubt I’ll be back to try some new algorithm in future.

- ChatGPT taught me that you can sort by two axes at the same time - just not perfectly accurately!

- Grass is a lot trickier than it looks!

This article has covered the ins and outs of the CPU side of my game’s grass drawing system. It has not covered the other half of the picture - what happens on the GPU every frame… maybe that’ll be the next blog I write!